When it comes to professional Generative AI, or indeed any form of intelligence, the ultimate goal is to enhance decision-making. While Generative AI can be used to create product descriptions, advertisements, social media content, or holiday cards, business fundamentally revolves around managing limited resources. Questions like “What products shall we make?”, “Whom should we advertise to?”, “What is our positioning and what messages shall we send?”, and “Where to invest?” need to be answered first, as their answers have a direct impact on any content that a company creates.

OpenAI’s GPT and similar Large Language Models (LLMs) have been transformative, offering the ability to craft everything from student essays to corporate reports. Their capabilities are impressive, yet when they are deployed in professional settings to answer questions such as the ones above, their limitations become apparent.

First, LLMs lack context. Imagine a complete newcomer trying to join an ongoing conversation seamlessly, based only on the last snippet they caught. The results can be hit or miss.

Additionally, the inner workings of LLMs are a black box. The reasoning behind a specific text generated by an AI model remains largely opaque, because the multitude of decisions involved in its creation is too complex for humans to follow. Professional environments prize traceable reasoning and structured decision-making, whereas LLMs operate through a long sequence of probabilistic choices aimed at predicting the next likely word or phrase. This method differs fundamentally from the deliberate, reflective thought process professionals employ when seeking truth and understanding.

Given these essential shortcomings, how can we make best use of LLMs for generative AI in a professional work context?

At the core, it’s vital to recalibrate our expectations, recognizing LLMs for their actual capabilities rather than overestimating their problem-solving abilities. Rather than tasking LLMs with short but intrinsically complex queries that demand expansive responses, such as “What are my competitors up to?”, we should view them as sophisticated sentence constructors. LLMs excel at weaving pre-existing concepts and answers into cohesive narratives, not necessarily at devising solutions from brief, open-ended prompts. By using LLMs in this manner, we harness their strength in text generation while acknowledging their limitations in generating understanding.

If LLMs are not generating the actual core of our answers, who is?

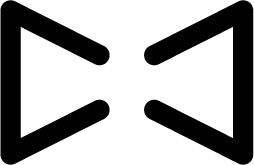

We have been developing AI Agents for a while, and they are increasingly emerging as the champions of automating knowledge work. AI Agents function as the ultimate data chefs: they gather ingredients (data) from various sources, prep the stove (the AI models), and cook up a dish (content) that matches human needs. They sift through data, analyze it for patterns and correlations, and serve it up in a way that LLMs can digest. AI Agents don’t just use models; they look at the facts in specific data sources. They can consider the who, what, when, where, why, and how, creating the answers to the important questions that we face. They can also use LLMs to analyze user inputs and data sources as well as to generate answers. AI Agents are the missing link, providing the context that LLMs need to generate relevant and reliable content.
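To make the "gather, prep, serve" idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of our production agents: the names `Record`, `build_prompt`, `run_agent`, `fetch_reviews`, `fetch_news`, and `echo_llm` are hypothetical, the data is made up, and the LLM is replaced by a stand-in function that simply returns the prompt it receives.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Record:
    source: str  # where the fact came from (hypothetical label)
    text: str    # the fact itself


def build_prompt(question: str, records: List[Record]) -> str:
    """Place the gathered facts before the question, so the LLM only phrases them."""
    context = "\n".join(
        f"[{i}] ({r.source}) {r.text}" for i, r in enumerate(records, start=1)
    )
    return (
        "Answer using only the numbered facts below.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}"
    )


def run_agent(
    question: str,
    sources: List[Callable[[], List[Record]]],
    llm: Callable[[str], str],
) -> str:
    """Gather records from every source, then hand the assembled prompt to the LLM."""
    records = [record for fetch in sources for record in fetch()]
    return llm(build_prompt(question, records))


# Stand-in data sources with invented example data.
def fetch_reviews() -> List[Record]:
    return [Record("review-site", "Customers praise the battery range.")]


def fetch_news() -> List[Record]:
    return [Record("news-feed", "A competitor announced a price cut.")]


# Stand-in for a real model call: it just returns the prompt it was given.
def echo_llm(prompt: str) -> str:
    return prompt


prompt_seen = run_agent("What do customers value?", [fetch_reviews, fetch_news], echo_llm)
print(prompt_seen)
```

In a real setup, `echo_llm` would be replaced by a call to whichever model is in use, and the fetch functions by connectors to actual data sources. The design point is that the facts are numbered and labeled with their source before the model ever sees the question.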

Ensuring Quality in a Sea of Data

Instead of using only a single prompt, AI Agents run hundreds or thousands of prompts to generate a single report. When LLMs are provided with extensive context, the reliability and quality of their outputs improve dramatically. Once the LLM has generated the text, AI Agents don’t just pat it on the back and send it on its way. They can scrutinize the content, check it against the facts, and ensure it meets the requirements. In this step, AI Agents become the new quality controllers or editors of the AI era, ensuring that the final product is not just factually accurate but also contextually sound. Moreover, AI Agents are developed in a way that makes their work and quality repeatable and transparent.
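One simple form such a quality check could take is sketched below in Python. This is an assumption-laden illustration, not our actual checker: it assumes the LLM was asked to cite numbered facts in square brackets (for example `[1]`), and it only verifies that every sentence carries a citation pointing to a known fact. It does not judge whether the sentence faithfully reflects that fact. The function name `check_draft` and all sample data are hypothetical.

```python
import re
from typing import Dict, List, Tuple


def check_draft(draft: str, facts: Dict[int, str]) -> List[Tuple[str, str]]:
    """Return (sentence, problem) pairs for sentences that fail the citation check."""
    problems: List[Tuple[str, str]] = []
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", draft) if s.strip()]
    for sentence in sentences:
        cited = [int(n) for n in re.findall(r"\[(\d+)\]", sentence)]
        if not cited:
            problems.append((sentence, "no citation"))
            continue
        unknown = [n for n in cited if n not in facts]
        if unknown:
            problems.append((sentence, f"unknown fact id(s): {unknown}"))
    return problems


# Invented example data for illustration only.
facts = {
    1: "Customers praise the battery range.",
    2: "A competitor announced a price cut.",
}
draft = (
    "Customers praise the battery range [1]. "
    "Sales doubled last year. "
    "The competitor cut prices [7]."
)

problems = check_draft(draft, facts)
for sentence, problem in problems:
    print(f"{problem}: {sentence}")
```

A flagged sentence could then be dropped, regenerated with a narrower prompt, or passed to a human editor. Because the check is deterministic, running it twice on the same draft gives the same result, which is what makes this step repeatable.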

Our Experience of Applying AI Agents for Generative AI

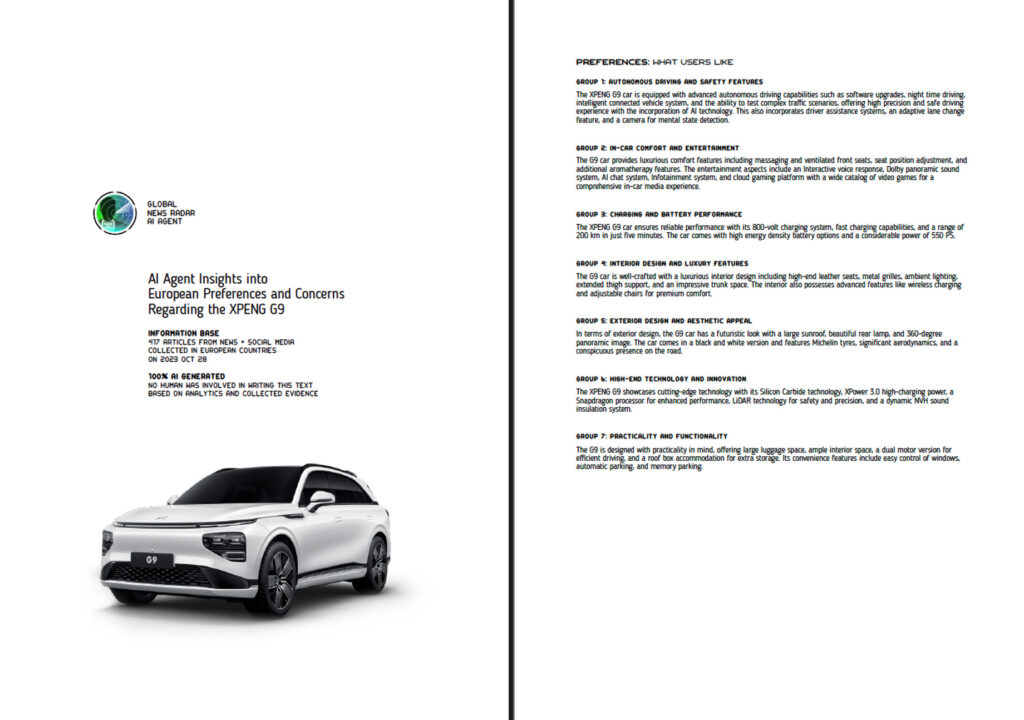

In our journey with generative AI, the application of AI Agents has been a game-changer. Take, for instance, our approach to customer feedback analysis. We employ AI Agents specifically tasked to sift through and analyze extensive customer feedback spanning 30 countries. This data, meticulously gathered by our News Radar AI Agent, is then distilled into concise two-page executive summaries by our Report AI Agent. Impressively, this entire data processing cycle is completed within about an hour, and there’s potential for further speed enhancements if needed.

But our use of AI Agents doesn’t stop there. We also apply a similar combination of Global News Radar and Report AI Agents for policy analysis. This showcases the remarkable ability of our AI Agents to not only read and comprehend hundreds of policy-related articles but also to categorize them into meaningful clusters. The agents then generate summaries that reference all original sources, ensuring both reliability and traceability.
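The cluster-then-summarize step with source references can be sketched as follows. This Python example is a deliberately simplified stand-in: it groups articles by keyword matching on the title, whereas a real agent would more likely use an LLM or an embedding model for categorization. The names `Article`, `TOPIC_KEYWORDS`, `categorize`, `cluster`, and `summarize`, the topics, and the `example.com` URLs are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Article:
    url: str
    title: str


# Hypothetical topics and keywords; a stand-in for model-based categorization.
TOPIC_KEYWORDS: Dict[str, List[str]] = {
    "emissions": ["emission", "co2"],
    "parking": ["parking"],
}


def categorize(article: Article) -> str:
    """Return the first topic whose keywords appear in the title, else 'other'."""
    title = article.title.lower()
    for topic, keywords in TOPIC_KEYWORDS.items():
        if any(keyword in title for keyword in keywords):
            return topic
    return "other"


def cluster(articles: List[Article]) -> Dict[str, List[Article]]:
    """Group articles by topic."""
    clusters: Dict[str, List[Article]] = defaultdict(list)
    for article in articles:
        clusters[categorize(article)].append(article)
    return dict(clusters)


def summarize(clusters: Dict[str, List[Article]]) -> str:
    """Emit one line per topic that lists every source URL behind it."""
    lines = []
    for topic in sorted(clusters):
        refs = ", ".join(a.url for a in clusters[topic])
        lines.append(f"{topic}: {len(clusters[topic])} article(s). Sources: {refs}")
    return "\n".join(lines)


# Invented example articles for illustration only.
articles = [
    Article("https://example.com/a1", "Paris tightens CO2 emission limits"),
    Article("https://example.com/a2", "New parking rules for delivery vans"),
    Article("https://example.com/a3", "City council elects new chair"),
    Article("https://example.com/a4", "Low-emission zone to expand"),
]

clusters = cluster(articles)
summary = summarize(clusters)
print(summary)
```

Keeping the URL attached to each article through every step is what allows the final summary to point back to its sources, regardless of how the categorization itself is implemented.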

This methodology allows us to provide precise, trustworthy, and repeatable answers to specific queries. Questions like “What do people in Europe appreciate about my competitor’s car?” or “Which new regulations in Paris are relevant to us?” can now be answered following a structured, methodical process. By using AI Agents, we’re not just gathering data; we’re synthesizing it into actionable intelligence, changing how we approach and solve complex questions in our business.

Looking Ahead

Connecting AI Agents with LLMs isn’t just about making AI smarter; it’s about making it more useful, more aligned with our world. As AI continues to permeate every facet of our lives, from how we work to how we consume media, the need for AI that understands us—and our context—becomes ever more critical.

AI Agents are a significant leap toward an AI that can truly interface with our complex world. As this technology matures, we may find ourselves in a new age of digital intelligence—one where AI can predict, adapt, and respond not just with cold calculation, but with an informed, contextual understanding.

The promise of AI Agents is a future where AI understands not just the words we say but the world we inhabit—a future where AI becomes a true ally in navigating the ever-expanding universe of data. As we stand on the brink of this new era, one thing is clear: the journey of AI is just beginning, and the role of AI Agents will be pivotal in shaping its course.